Across boardrooms and executive meetings, AI is discussed with urgency but little shared clarity. One leader asks which AI platform to buy. Another asks how competitors are using copilots. A third wonders why pilot programs never translate into measurable revenue impact.

This confusion stems from a foundational mistake: treating AI as a collection of tools rather than as a business system. For enterprises, AI is not software to deploy. It is an operating capability that touches data governance, revenue models, compliance, and performance accountability. Without strategy, AI adoption becomes fragmented, risky, and expensive—regardless of how advanced the tools appear.

Why AI Tools Became the Default Enterprise Conversation

The loudest voices in the market focus on tools. Vendors, platforms, and product updates dominate headlines, each promising productivity gains and faster decisions. The narrative is simple and appealing: adopt the right AI tools and performance will follow.

Common claims include:

- AI copilots increase employee productivity

- Automation reduces operational cost

- Generative AI accelerates content, sales, and analytics

What is rarely stated is that these benefits assume something already exists: a clear operating model, clean data flows, defined accountability, and governance aligned to enterprise risk. Tool-first thinking thrives because it is tangible and fast. Strategy work is quieter, slower, and harder to market—yet far more decisive for long-term outcomes.

The Strategy Gap Enterprises Rarely Address

What most enterprises face today is not a tool gap, but a growing strategy debt. Strategy debt accumulates when AI initiatives launch without alignment to business objectives, operating models, or measurement frameworks.

Key dimensions of this gap include:

- Organizational readiness

AI performance depends on data maturity, decision rights, and cross-functional coordination. Many enterprises attempt AI initiatives without resolving data ownership, governance structures, or model accountability.

- Governance before automation

Compliance, explainability, and auditability are often addressed after deployment, if at all. In regulated industries, this creates downstream risk that negates early efficiency gains.

- Operating model misalignment

AI outputs frequently fail to integrate into workflows that actually drive revenue or performance decisions. Insights exist, but action does not.

- Measurement ambiguity

Enterprises struggle to answer basic questions: Which AI initiatives impact revenue? Which reduce risk? Which merely add activity?

This is where AI Strategy Consulting becomes critical—not to select tools, but to define how AI fits into the enterprise’s economic engine. Without this foundation, tools amplify fragmentation rather than performance.

When AI Tools Create Revenue and Compliance Risk

Poorly integrated AI does not simply underperform—it actively leaks value. Revenue leakage occurs when AI-generated insights are disconnected from attribution, forecasting, and execution systems. Compliance risk grows when models operate without clear lineage or oversight.

Common failure patterns include:

- Sales and marketing AI operating on inconsistent data definitions

- Forecasting models that cannot be explained to finance or regulators

- Automated decisions without documented accountability

These issues are not technological failures. They are architectural ones. Enterprises often discover them only after scale, when reversing course is costly. A strategy-led approach addresses these risks early by aligning AI initiatives with revenue architecture, compliance frameworks, and performance metrics before deployment.

Why Scaling AI Fails Inside Large Organizations

AI pilots often succeed in isolation and fail at scale. The reason is structural. Large enterprises are complex systems with legacy data, multiple decision layers, and regulatory exposure.

Scaling fails when:

- Data pipelines cannot support enterprise-wide consistency

- Business units deploy AI independently, creating conflicting signals

- Leadership lacks a unified performance narrative tied to AI outcomes

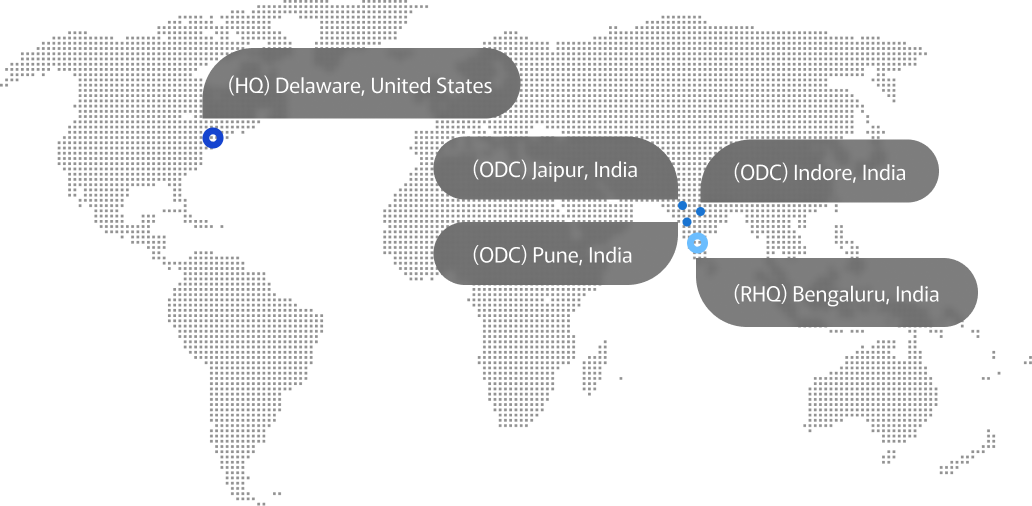

Advayan’s work with mid-to-large enterprises often begins at this inflection point—not to replace tools, but to rationalize them within a coherent strategy. The focus is on performance accountability, compliance-ready design, and modern revenue architecture that turns AI from experimentation into an enterprise capability.

The Market’s Obsession With Tools—and Why It Misses the Point

The market is saturated with comparisons: best AI platforms, top copilots, feature matrices. While useful at a tactical level, this framing obscures the real question enterprises must answer: What role should AI play in how we generate, protect, and measure value?

Tools change rapidly. Strategy endures. Enterprises that prioritize AI strategy over tool accumulation build systems that adapt—without restarting the conversation every budget cycle.

What an Enterprise AI Strategy Actually Looks Like in Practice

An enterprise AI strategy is not a roadmap of tools. It is a decision framework that governs where AI is applied, how outcomes are measured, and who is accountable when models influence revenue, risk, or customer experience.

At a practical level, effective AI Strategy Consulting focuses on four interconnected layers:

Business and revenue alignment

AI initiatives are mapped directly to economic outcomes:

- Revenue growth, protection, or acceleration

- Cost optimization tied to measurable efficiency

- Risk reduction with quantified exposure

Every use case is justified in financial terms, not technical novelty.

Data and governance architecture

Before scale, enterprises must define:

- Data ownership and stewardship

- Model explainability standards

- Audit trails for automated decisions

- Regulatory alignment across regions and business units

This is where many AI programs stall. Governance is treated as friction, when in reality it is what allows AI to operate safely at scale.
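To make the governance items above concrete, here is a minimal sketch of what an audit-trail entry for an automated decision might capture. The schema, the field names, and the `record_decision` helper are illustrative assumptions, not a standard or any vendor's API; a real program would write to durable, access-controlled storage rather than an in-memory list.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit-trail entry for an automated decision (hypothetical schema)."""
    model_id: str
    model_version: str
    data_owner: str     # accountable steward for the input data
    region: str         # jurisdiction whose rules apply
    inputs: dict
    output: str
    explanation: str    # human-readable reason for the decision
    decided_at: str     # UTC timestamp in ISO 8601 format


def record_decision(log: list, **fields) -> DecisionRecord:
    """Reject entries missing governance fields, then append to the log."""
    for required in ("data_owner", "explanation"):
        if not fields.get(required):
            raise ValueError(f"missing governance field: {required}")
    entry = DecisionRecord(
        decided_at=datetime.now(timezone.utc).isoformat(), **fields
    )
    # Serialize so the stored entry cannot be mutated through shared references.
    log.append(json.dumps(asdict(entry), sort_keys=True))
    return entry


# Example usage with made-up values.
audit_log = []
record_decision(
    audit_log,
    model_id="credit-limit-scorer",
    model_version="1.4.0",
    data_owner="risk-data-office",
    region="EU",
    inputs={"customer_segment": "smb", "utilization": 0.62},
    output="limit_unchanged",
    explanation="Utilization below review threshold",
)
```

The point of the sketch is that ownership and explanation are required at write time, so a decision without an accountable owner never enters the record in the first place.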

Operating model integration

AI outputs must enter real decision loops:

- Sales forecasts that inform inventory and pricing

- Marketing intelligence that feeds revenue attribution

- Risk models that align with compliance and finance

If AI insights do not change behavior, they do not create value.

Performance measurement and accountability

Enterprises need clarity on:

- Which models drive which outcomes

- How performance is tracked over time

- When models should be retired, retrained, or restricted

Without this, AI becomes an opaque layer that executives cannot confidently defend or scale.
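As a rough illustration of the three questions above, the following sketch ties each model to an owner and a business outcome and derives a lifecycle action from tracked performance. The `ModelEntry` fields, the single "higher is better" metric, and the threshold values are all assumptions chosen for the example; actual thresholds would come from the enterprise's own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class ModelEntry:
    name: str
    owner: str              # accountable team or executive
    outcome: str            # business outcome the model is tied to
    baseline_metric: float  # performance when the model was approved
    current_metric: float   # latest tracked performance (higher is better)


def lifecycle_action(
    m: ModelEntry,
    retrain_drop: float = 0.05,   # hypothetical thresholds, expressed as
    restrict_drop: float = 0.15,  # relative decline from the baseline
    retire_drop: float = 0.30,
) -> str:
    """Return 'keep', 'retrain', 'restrict', or 'retire' for a model."""
    if m.baseline_metric <= 0:
        raise ValueError("baseline_metric must be positive")
    drop = (m.baseline_metric - m.current_metric) / m.baseline_metric
    if drop >= retire_drop:
        return "retire"
    if drop >= restrict_drop:
        return "restrict"
    if drop >= retrain_drop:
        return "retrain"
    return "keep"


# Example registry with made-up models and numbers.
registry = [
    ModelEntry("demand-forecast", "supply-chain", "inventory cost", 0.80, 0.79),
    ModelEntry("lead-scoring", "revenue-ops", "pipeline conversion", 0.80, 0.70),
    ModelEntry("churn-risk", "customer-success", "retention", 0.80, 0.66),
    ModelEntry("fraud-screen", "compliance", "loss exposure", 0.80, 0.50),
]

actions = {m.name: lifecycle_action(m) for m in registry}
for m in registry:
    print(f"{m.name:16} owner={m.owner:18} outcome={m.outcome:20} -> {actions[m.name]}")
```

Even a table this simple gives executives a defensible answer to "which model drives which outcome, and who decides when it changes".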

This is the quiet work that rarely appears in vendor demos, yet determines whether AI becomes a durable enterprise capability or a recurring source of disappointment.

Conclusion

Enterprises do not fail at AI because the tools are inadequate. They fail because strategy is deferred in favor of speed. Tool-led adoption creates fragmentation, hidden risk, and diminishing returns at scale. Strategy-led adoption builds resilience, accountability, and long-term advantage. Organizations that treat AI as a governed business system—supported by experienced strategic partners—are the ones that convert experimentation into sustained performance. The difference is not ambition. It is discipline.