AI certifications are multiplying at a remarkable pace. New badges, micro-credentials, and “expert” labels appear weekly, promising readiness for an AI-driven future. For individual learners, this may feel empowering. For enterprises, it is quietly becoming a liability. Business leaders are discovering that certifications rarely translate into governed, compliant, revenue-aligned AI systems at scale. The issue is not talent scarcity or technical ignorance. It is the widening gap between what certifications validate and what enterprises actually need to deploy AI responsibly, profitably, and sustainably. Understanding this gap is now a strategic imperative, especially for organizations navigating regulatory pressure, performance accountability, and long-term AI investments.

The Explosion of AI Credentials and the Signal-to-Noise Problem

The AI certification market is experiencing classic credential inflation. As demand for AI skills surged, training providers responded by packaging narrow competencies into marketable credentials. The result is an oversaturated ecosystem where certifications no longer act as reliable signals of enterprise readiness.

Several patterns are now evident:

- Over-saturation: Similar certifications cover overlapping tools, models, or libraries with minimal differentiation.

- Low real-world transferability: Most programs emphasize isolated use cases rather than enterprise workflows.

- Rapid obsolescence: Curricula struggle to keep pace with evolving models, regulations, and deployment architectures.

For enterprises, this creates confusion rather than confidence. A “certified” team may still lack the ability to deploy AI across business units, integrate it with revenue operations, or manage risk across the model lifecycle. Certifications validate exposure, not operational maturity.

Why Certifications Struggle in Enterprise AI Environments

Enterprise AI is not a skills problem; it is a systems problem. Certifications are inherently individual-focused, while enterprises operate through interconnected processes, controls, and incentives.

Most certifications fail to account for:

- Model lifecycle management: From data sourcing and training to monitoring, retraining, and decommissioning.

- Cross-functional dependencies: Legal, compliance, IT security, finance, and revenue teams all influence AI outcomes.

- Decision accountability: Who owns model performance when AI impacts pricing, forecasting, or customer experience?

Without these dimensions, certifications produce fragmented expertise. Teams may know how to build or prompt models, but not how to operationalize them within enterprise-grade guardrails. This is where many AI initiatives stall: after pilot success but before scalable impact.

The Hidden Risk: Governance Gaps Behind “Certified” AI

What is rarely discussed openly is how certifications can create a false sense of security. Leaders may assume that “certified” equals “safe,” when in reality governance risk can increase: certified teams gain the confidence to deploy faster without the controls that make fast deployment safe.

Enterprise AI governance requires:

- Compliance-by-design, not post-deployment fixes

- Policy-aligned model usage, mapped to regulatory obligations

- Continuous monitoring for bias, drift, and unintended outcomes

Certifications rarely cover these requirements in depth. They are not designed to align AI usage with compliance frameworks, revenue accountability, or audit readiness. As a result, organizations may deploy AI faster, but with greater exposure to regulatory scrutiny, reputational damage, or performance volatility.

In regulated industries, this gap is particularly dangerous. AI failures rarely stem from malicious intent or incompetence; they arise from uncoordinated adoption without governance architecture.

Enterprise Value Is Lost to Poor Orchestration, Not Skill Shortages

A recurring misconception is that enterprises fail with AI because teams “don’t know enough.” In practice, most failures stem from a lack of orchestration.

Orchestration means:

- Aligning AI initiatives with revenue and performance objectives

- Integrating governance into operating models rather than layering it on top

- Ensuring accountability across data, models, and outcomes

When AI is treated as a collection of tools or certifications, value fragments. When treated as an enterprise capability, value compounds. This distinction separates experimental adoption from scalable transformation.

This is also where enterprise AI governance consulting becomes relevant: not as a substitute for training, but as a structural layer that connects strategy, compliance, and performance into a coherent system.

What Executives Should Actually Evaluate Instead

Rather than asking how many certifications teams hold, executives are shifting toward more durable questions:

- Can our AI models be audited, explained, and governed at scale?

- Do AI initiatives tie directly to revenue impact and performance metrics?

- Are compliance, risk, and security embedded from day one?

- Can this system evolve as regulations and markets change?

These questions reflect maturity, not momentum. They acknowledge that long-term AI success depends less on individual credentials and more on enterprise design.

From Credentials to Capability: A More Durable AI Strategy

Enterprises that move beyond certification-driven thinking tend to converge on a different operating philosophy. They stop asking whether teams are “AI trained” and start designing how AI behaves inside the organization.

A capability-first AI strategy typically emphasizes:

- Governance as an operating system, not a compliance checkbox

- Model lifecycle ownership, with clear accountability from experimentation to retirement

- Revenue and performance alignment, ensuring AI investments move measurable business outcomes

- Scalability by design, so pilots do not collapse under enterprise complexity

This approach treats AI less like software and more like an evolving business asset. Skills still matter, but they are contextualized within guardrails, incentives, and decision rights. Certifications may support this ecosystem, but they cannot replace it.

In practice, organizations adopting this mindset shift investment away from fragmented upskilling toward architecture, orchestration, and governance frameworks that persist regardless of tools or models.

Why Compliance and ROI Are Now Linked in AI Decisions

One of the most consequential shifts in enterprise AI is the collapse of the traditional boundary between compliance and performance. Historically, compliance was seen as a cost center. In AI-driven systems, weak compliance directly erodes ROI.

Poorly governed AI leads to:

- Rework after regulatory findings

- Model shutdowns due to audit failures

- Loss of executive confidence in AI-driven decisions

- Delayed scaling despite technical readiness

Conversely, compliance-by-design accelerates adoption. When governance is embedded early, AI systems scale faster because they are trusted. This trust is not philosophical; it is operational. Revenue leaders are more willing to rely on AI forecasts, pricing models, or personalization engines when accountability and controls are explicit.

This is why enterprises increasingly evaluate AI initiatives through a dual lens: performance upside and governance resilience. Certifications alone rarely address this intersection.

The Quiet Mismatch Between Tool Expertise and Enterprise Reality

Much of the online discourse still celebrates tool fluency: learning the latest model, platform, or framework as quickly as possible. While this narrative dominates search results, it rarely reflects how enterprises actually operate.

Tool-centric certifications assume:

- Stable requirements

- Minimal regulatory friction

- Isolated use cases

Enterprise reality is different:

- Requirements evolve with market and regulatory shifts

- AI decisions affect customers, revenue, and compliance simultaneously

- Systems must integrate with legacy infrastructure and operating models

This mismatch explains why many organizations accumulate AI tools but struggle to generate durable value. The missing layer is not technical brilliance; it is strategic coherence.

How Leading Enterprises Are Reframing AI Maturity

More mature organizations are quietly reframing what “AI readiness” means. Instead of counting credentials, they assess:

| Dimension | Certification-Centric View | Enterprise Capability View |
| --- | --- | --- |
| Skills | Individual tool proficiency | Cross-functional operating competence |
| Governance | Optional or post-hoc | Embedded and continuous |
| ROI | Assumed from adoption | Measured through performance impact |
| Risk | Managed by teams | Managed by system design |
| Scalability | Tool-dependent | Architecture-driven |

This reframing does not reject learning or certifications outright. It simply places them in proportion. Skills are inputs; orchestration is the multiplier.

Where Strategic Partners Quietly Make the Difference

Enterprises that succeed with AI at scale rarely do so through fragmented internal efforts alone. They work with strategic allies who understand how revenue, performance, compliance, and technology intersect.

In these relationships, the value does not come from templates or training materials. It comes from:

- Translating regulatory expectations into operational design

- Aligning AI initiatives with measurable business outcomes

- Designing governance frameworks that evolve with the enterprise

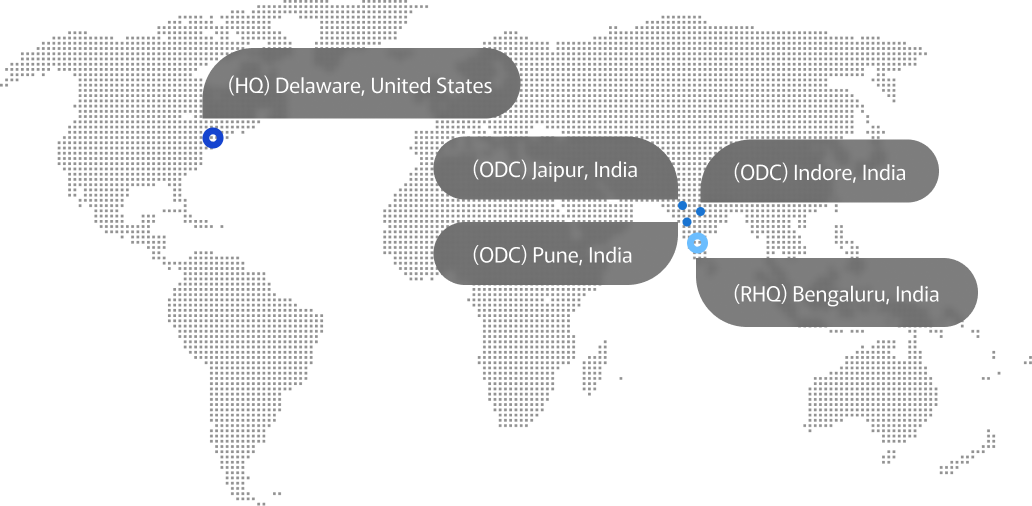

Firms like Advayan operate in this space, not as vendors pushing tools or certifications, but as partners helping organizations design AI systems that remain compliant, scalable, and economically sound over time. The differentiation lies in integration, not instruction.

Conclusion

AI certifications are not inherently useless. They are simply insufficient for enterprise-scale transformation. As AI becomes embedded in revenue engines, compliance frameworks, and executive decision-making, the limitations of credential-first thinking become unavoidable. Enterprises do not fail because they lack certified professionals; they fail because they lack orchestration, governance, and strategic alignment. The organizations that move past this phase will be those that design AI as a managed capability, grounded in performance accountability and compliance-by-design, rather than as a collection of tools validated by certificates.