Generative AI adoption inside enterprises is accelerating faster than organizational skill maturity can realistically keep up. This gap is not primarily about a lack of technical talent or insufficient training budgets. It is about how quickly decision-making, execution, and accountability models are changing under AI-assisted workflows. Leaders are discovering that while tools are easy to deploy, the skills required to govern, integrate, and extract sustainable value from them are far more complex. The urgency is structural, not emotional. Enterprises that treat AI as a capability layered onto existing operating models risk slower execution, weaker controls, and diluted revenue performance. The skills conversation must move beyond reskilling checklists toward enterprise-wide readiness.

The AI Skills Conversation Everyone Is Having

Most executive discussions around generative AI focus on a familiar set of themes:

- Automation of repetitive tasks across marketing, finance, and operations

- Talent shortages for data science, AI engineering, and analytics

- Corporate reskilling and AI literacy initiatives

- Short-term productivity gains from copilots and AI assistants

These topics matter, but they are increasingly table stakes. Automation reduces effort, not accountability. Reskilling improves familiarity, not necessarily judgment. Productivity gains appear quickly but plateau when organizations hit structural constraints. This surface-level narrative explains what is changing, but not why so many AI initiatives stall after early pilots.

The Skills Problem Leaders Are Missing

The more consequential issue is not a skills gap, but skills decay. As AI systems absorb analysis, forecasting, and content generation, human skills erode unevenly across the organization. Decision-makers may retain authority while losing hands-on understanding of how outcomes are produced.

Skills Gap vs. Skills Decay

| Dimension | Skills Gap | Skills Decay |
| --- | --- | --- |
| Definition | Skills never developed | Skills weaken over time |
| Visibility | Easy to diagnose | Hard to detect |
| Common Fix | Training programs | Operating-model redesign |
| Enterprise Risk | Slower adoption | Poor decisions at scale |

Skills decay creates fragile organizations that appear capable on paper but struggle under audit, market shifts, or regulatory scrutiny. Enterprises often discover this only after performance volatility emerges.

When Automation Becomes Decision Dependency

Generative AI does more than automate execution. It subtly reshapes how decisions are made. Over time, teams begin to defer judgment to AI-generated recommendations, forecasts, and narratives. This creates decision dependency, where human oversight becomes reactive rather than intentional.

From a revenue and performance perspective, this has several implications:

- Forecast accuracy improves initially, then degrades when assumptions shift

- Attribution models become opaque as AI intermediates insights

- Risk tolerance increases without corresponding governance maturity

Decision dependency is not a technical failure. It is an organizational design issue, requiring clarity around where AI advises, where humans decide, and how accountability is enforced.
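One way to make those boundaries explicit is to write them down as a routing rule that sits between an AI recommendation and any action taken on it. The sketch below is illustrative only: the decision types, owner names, and thresholds (`REVENUE_LIMIT`, `MIN_CONFIDENCE`) are hypothetical assumptions, not a prescribed policy, and a real enterprise would set them through its own governance process.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AI_ADVISES = "ai_advises"        # output may be applied, then logged
    HUMAN_DECIDES = "human_decides"  # a named owner must approve first


@dataclass(frozen=True)
class Recommendation:
    decision_type: str     # hypothetical label, e.g. "pricing_change"
    revenue_impact: float  # estimated impact in currency units
    confidence: float      # model-reported confidence, 0.0 to 1.0


# Assumed policy values, chosen purely for illustration.
REVENUE_LIMIT = 50_000.0
MIN_CONFIDENCE = 0.8
OWNERS = {"pricing_change": "vp_revenue", "forecast_update": "fpa_lead"}


def route(rec: Recommendation) -> tuple[Route, str]:
    """Return the route and the accountable owner for a recommendation."""
    owner = OWNERS.get(rec.decision_type, "unassigned")
    needs_human = (
        owner == "unassigned"                  # nobody accountable yet
        or rec.revenue_impact >= REVENUE_LIMIT  # too material to automate
        or rec.confidence < MIN_CONFIDENCE      # model itself is unsure
    )
    return (Route.HUMAN_DECIDES if needs_human else Route.AI_ADVISES, owner)
```

The point of the sketch is less the code than the design choice it forces: every decision type needs a named owner, and anything without one defaults to human review rather than to the model.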

Governance, Revenue, and Compliance Blind Spots

AI tool adoption often outpaces enterprise governance models. Different functions deploy tools independently, creating fragmented workflows and inconsistent controls. This sprawl introduces gaps that rarely surface in pilot phases.

Tool Adoption vs. Operational Readiness

| Area | Tool Adoption | Operational Readiness |
| --- | --- | --- |
| Speed | High | Moderate |
| Visibility | Fragmented | Centralized |
| Compliance Control | Ad hoc | Embedded |
| Revenue Alignment | Indirect | Explicit |

Without alignment between AI usage and enterprise controls, organizations face revenue leakage, audit exposure, and performance inconsistency. Strategic consultancies increasingly see this pattern across industries: the technology works, but the enterprise system around it does not.

Why Training Programs Stall Without Operating Change

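Training remains necessary but is not sufficient on its own. Teaching teams how to use AI tools without redefining workflows, incentives, and decision rights creates local efficiency and global confusion: individual teams move faster while the enterprise loses a shared view of who decides what.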

Tactical Fixes vs. Strategic Transformation

| Approach | Outcome |
| --- | --- |
| Tool-focused training | Short-term efficiency |
| Role-based reskilling | Improved adoption |
| Operating-model redesign | Sustainable performance |
| Governance integration | Scalable compliance |

Organizations that succeed treat AI readiness as an enterprise transformation effort, not a learning initiative. This is where systems-driven advisors quietly add value—connecting revenue, performance, and compliance considerations into a coherent operating model rather than isolated fixes.

The Market Noise Leaders Must Filter Carefully

The market is saturated with advice that treats generative AI adoption as a tooling problem. Lists of AI platforms, prompt libraries, and “quick win” playbooks dominate executive briefings. While not wrong, they are incomplete—and often misleading when taken out of a systems context.

Three patterns appear repeatedly:

- Tool-centric thinking that assumes better prompts equal better outcomes

- Role-agnostic upskilling that ignores revenue, compliance, and risk differences across functions

- Isolated productivity metrics that fail to connect AI output to business results

From a technical standpoint, prompt engineering is largely an interface skill: it shapes how a request is phrased, not how the output is validated or used. It does not address data provenance, model drift, or downstream decision impacts. Enterprises that optimize for surface-level fluency often find themselves with faster workflows but weaker controls, especially in regulated or revenue-critical environments.

The signal for leaders is this: AI competence is not measured by how many tools are deployed, but by how reliably outcomes align with enterprise objectives.

Why Internal Teams Struggle to Self-Correct

Most organizations assume that misalignment will resolve organically over time. In practice, internal teams are constrained by incentives, silos, and legacy processes that predate AI entirely.

Several structural limits emerge:

- Functional silos optimize AI usage locally but degrade enterprise-wide coherence

- Legacy KPIs fail to capture AI-assisted decision quality or risk exposure

- Change fatigue limits appetite for operating-model redesign

Even highly capable teams struggle to step outside their own execution layers to redesign governance, revenue attribution, and performance management simultaneously. This is not a talent issue; it is a bandwidth and perspective issue.
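As one illustration of a KPI that legacy dashboards typically lack, a team could track how often AI recommendations are accepted without any human change, broken out by function. The sketch below assumes a hypothetical decision log of `(function_name, accepted_unchanged)` pairs; the log format and the function name `acceptance_rates` are assumptions made for the example. A persistently high rate is not proof of a problem, but it may be an early signal of the decision dependency described earlier.

```python
from collections import defaultdict


def acceptance_rates(log):
    """Share of AI recommendations accepted unchanged, per function.

    `log` is an iterable of (function_name, accepted_unchanged) pairs,
    a hypothetical format assumed for this sketch.
    """
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for function_name, accepted_unchanged in log:
        totals[function_name] += 1
        if accepted_unchanged:
            accepted[function_name] += 1
    # Only functions with at least one logged decision appear in `totals`,
    # so the division below never hits zero.
    return {name: accepted[name] / totals[name] for name in totals}


# Illustrative usage with made-up entries.
sample_log = [
    ("finance", True),
    ("finance", True),
    ("finance", False),
    ("marketing", True),
]
rates = acceptance_rates(sample_log)
```

A metric like this is only useful alongside outcome data; on its own it shows how much judgment is being exercised, not whether the resulting decisions were good.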

Enterprises that adapt faster tend to introduce an external systems lens—one that connects AI capabilities to operating models, compliance requirements, and financial outcomes without being embedded in day-to-day execution.

From AI Adoption to AI Readiness

AI readiness is not a milestone. It is an ongoing capability that evolves as models, regulations, and markets change. The shift from adoption to readiness requires reframing success criteria.

Key indicators of AI readiness include:

- Clear decision boundaries between AI-generated insight and human authority

- Embedded governance that scales with tool usage

- Revenue models that reflect AI-assisted attribution and forecasting

- Performance management that rewards judgment, not just output speed

This reframing moves AI from an innovation agenda into core enterprise management. Organizations that make this transition earlier experience fewer downstream corrections and more predictable value creation.

The Quiet Role of Strategic Allies

In mature enterprises, the most effective AI transformations rarely announce themselves as transformations. They appear as steady improvements in execution clarity, audit confidence, and revenue consistency.

This is where consultative partners with cross-domain depth matter. Not as vendors, but as integrators of perspective—bridging technology, finance, operations, and compliance into a coherent system.

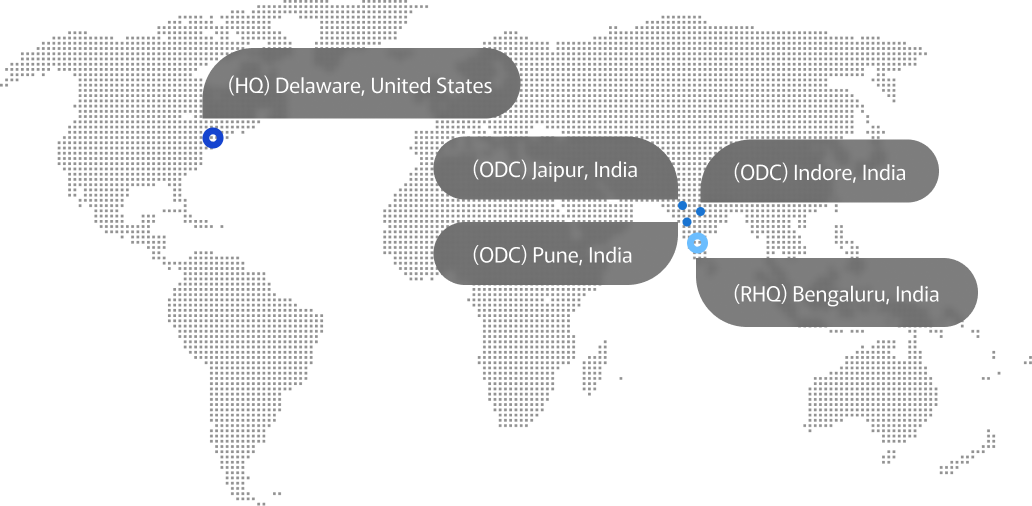

Firms like Advayan operate in this space by design. Their work focuses less on deploying AI and more on ensuring that AI strengthens, rather than fragments, enterprise performance. By aligning modern revenue models, performance frameworks, and compliance structures, organizations gain resilience alongside innovation.

The value is subtle but durable: fewer surprises, cleaner reporting, and leadership teams that understand not just what AI produces, but why.

Looking Ahead: Sustainable Advantage Over Speed

The enterprises that win with generative AI over the next decade will not be the fastest adopters. They will be the most deliberate designers. They will treat skills as living capabilities, governance as an enabler, and performance as a system—not a dashboard.

AI will continue to evolve. Skills will continue to shift. The differentiator will be whether organizations can adapt without destabilizing themselves in the process.

That is not a tooling challenge. It is a leadership one.