For leaders who are steering enterprise transformation, the phrase “AI-ready talent” has become a sort of lodestar — and a liability. At first glance, it promises a workforce that can deliver on innovation, automate processes, and unlock new revenue streams. In reality, many organisations discover too late that chasing talent labels or certifications doesn’t protect revenue, govern risk, or align execution with strategic priorities. Without a deeper framework for readiness, these claims become dangerous assumptions that can stall transformation, misallocate budget, and expose compliance blind spots.

What Leaders Mean by “AI-Ready Talent” — and Why That Definition Misleads

In most boardrooms, “AI-ready” becomes shorthand for job titles, certifications, or tool fluency. Recruiters and leaders talk about candidates who “know machine learning,” “are comfortable with generative models,” or “have AI certificates,” treating these as badges rather than capabilities. This defines readiness by surface-level signals, such as a resume keyword or a vendor badge, rather than by measurable business impact.

This narrative proliferates because it’s simpler to check a box than to measure organisational capability. Too often, teams equate AI fluency with:

- Familiarity with tools such as ChatGPT or popular ML frameworks.

- Lists of “AI skills” that read like checkboxes (Python, NLP, prompt engineering).

- Hiring claims that spotlight individual prowess over team execution.

But real enterprise challenges are broader. They include aligning AI work with revenue operations, governing models for compliance, operationalising workflows across functions, and scaling pilots into repeatable value streams. What many articles quietly miss is how often these structural gaps — not skills deficits — are what derail transformation strategies.

The Hidden Execution Gaps Organisations Actually Face

As companies adopt AI, they confront systemic constraints that aren’t solved by simply adding “AI-ready” people. These include:

- Governance blind spots: Without clear policy, audit trails, or model governance, enterprises risk regulatory fines and inconsistent decisions. Compliance isn’t a checklist; it’s an operating discipline.

- Fragmented data foundations: AI projects are only as good as the data that feeds them. Inconsistent, siloed, or ungoverned data pipelines lead to brittle models and misaligned insights.

- Workflow misalignment: AI must connect to how teams actually work — from revenue operations to customer delivery — not just sit in a lab or analytics silo.

- Revenue disconnects: Skills that look strong in isolation — like model tuning — don’t automatically translate to increased revenue if they don’t touch process, decision rights, and behaviour.

Research echoes this reality: when organisations chase skills lists instead of outcomes, a high percentage of AI initiatives stall or fail. Industry reporting highlights that many AI projects never progress beyond proof-of-concept and are abandoned before delivering tangible business value.

These gaps aren’t simply “harder” to fix; they reflect a category error. Leaders treat talent as a plug-and-play input, when what they really need is a coordinated system of execution.

When Talent Labels Don’t Equal Outcomes

Talent frameworks that rely on certifications or buzzword skills often obscure the fact that:

- Many “AI skills” aren’t actually transferable to business value: A certificate in a narrow technical domain doesn’t guarantee strategic judgment, domain fluency, or product prioritisation.

- Hiring premiums for AI skills can outpace actual business needs: Companies sometimes pay more for labels than they save in risk mitigation or revenue generation.

- Organisations inadvertently create internal skill silos: Teams become dependent on a small cadre of supposed “AI experts,” while broader functions remain unprepared.

Imagine a scenario where a company hires data scientists because they know the latest model, but the revenue team can’t interpret model outputs in a way that changes behaviour. That gap shows why “AI-ready” might mean tech savvy without outcome readiness.

Why “More Talent” Fails Without Revenue and Governance Alignment

Once organisations realise that “AI-ready talent” hasn’t delivered expected outcomes, the instinct is often to hire more aggressively or invest in advanced tools. This compounds the problem. AI initiatives fail less because teams lack intelligence and more because enterprises lack alignment.

In practice, AI touches three sensitive fault lines simultaneously:

- Revenue mechanics: pricing, forecasting, pipeline prioritisation, customer segmentation

- Operational accountability: decision ownership, exception handling, escalation paths

- Risk and compliance: data usage, explainability, auditability, regulatory exposure

When these aren’t designed together, AI becomes an accelerant for inconsistency. Models optimise for one metric while revenue teams are incentivised on another. Automation increases speed while governance lags behind. Talent, no matter how skilled, cannot compensate for these contradictions.

This is where many transformations quietly stall — not with dramatic failure, but with underwhelming impact.

What the Market Is Flooded With, and Why It Matters Less Than Leaders Think

The market is saturated with signals that feel like progress but rarely change outcomes. Certifications, tool stacks, and maturity models dominate conversations because they are easy to package and market.

Common examples include:

- “AI certifications” that test theory but not enterprise execution

- Vendor-led maturity models optimised to sell platforms

- Talent taxonomies that categorise skills without linking them to revenue or risk

These artefacts are not useless. They’re simply insufficient. Over-indexing on them distracts leaders from harder questions: Who owns AI-driven decisions? How are errors handled? What happens when models conflict with policy, pricing strategy, or regulatory expectations?

To clarify the difference, consider the contrast below:

| Focus Area | Over-Marketed Signals | What Actually Drives Outcomes |
| --- | --- | --- |
| Talent | Certifications, titles | Decision quality, domain fluency |
| Tools | Model sophistication | Workflow integration |
| Readiness | Skills checklists | Governance + execution alignment |
| Success | Pilot completion | Revenue and performance lift |

The over-marketed signals create comfort. The outcome drivers create advantage.

The Missing Layer Between Talent and Results

Enterprises that quietly outperform don’t talk more about AI talent. They talk less — and design more. They treat AI as part of a broader operating system that connects strategy, compliance, and performance.

This “missing layer” is not a role or a tool. It’s an orchestration capability that ensures:

- AI initiatives are anchored to revenue objectives and operational KPIs

- Governance is embedded into workflows, not enforced after the fact

- Teams understand not just how to build models, but when not to use them

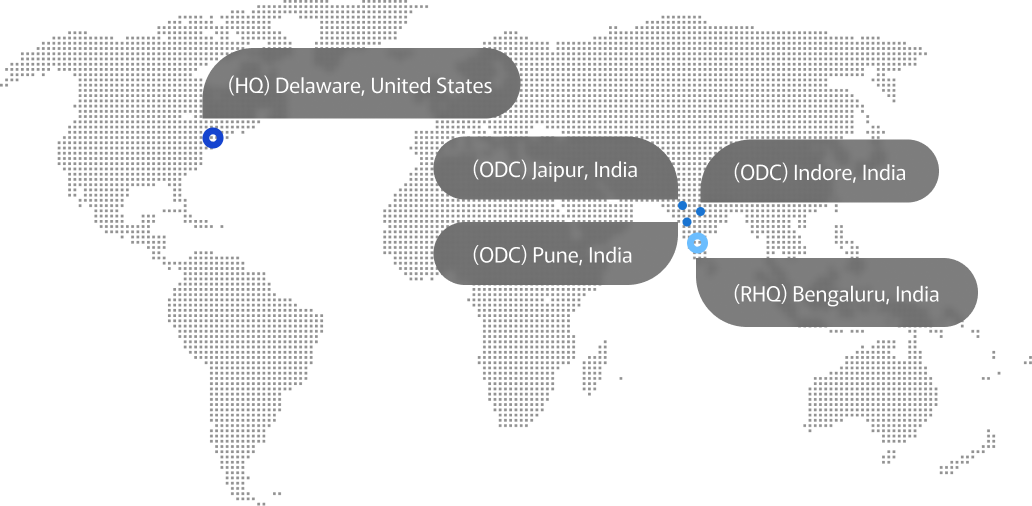

This is where a strategic ally becomes necessary: not to replace internal teams, but to integrate them. Firms like Advayan operate at this intersection, helping organisations translate ambition into execution by aligning AI efforts with revenue operations, regulatory discipline, and performance systems. The value isn’t in doing the work for teams, but in ensuring the work coheres.

From Talent Readiness to Organisational Readiness

The most effective reframing leaders can make is simple but uncomfortable: stop asking whether your people are AI-ready, and start asking whether your organisation is.

Organisational readiness shows up in several concrete ways:

- Clear decision rights around AI-driven outputs

- Shared metrics between AI teams, revenue leaders, and operations

- Governance that enables speed rather than slowing it

- Performance management that rewards adoption, not experimentation alone

When these elements are present, talent scales. When they’re absent, even elite teams struggle.

This shift explains why some companies with modest AI skills outperform peers with far deeper benches. Readiness is systemic, not individual.

Conclusion

“AI-ready talent” is an appealing shortcut, but it masks the real work of transformation. Skills alone don’t protect revenue, ensure compliance, or deliver performance gains. Execution does, through alignment, governance, and operational design. Organisations that recognise this early avoid costly detours and quiet failures. Those that work with strategic allies who understand the full system tend to move faster, more safely, and with far more confidence.